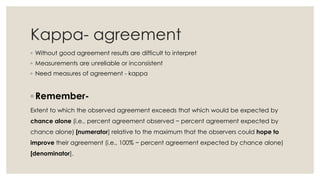

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

Percent agreement and Cohen's kappa values for automated classification... | Download Scientific Diagram

Item level percentage agreement and Cohen's kappa between TAI and TAI-Q... | Download Scientific Diagram

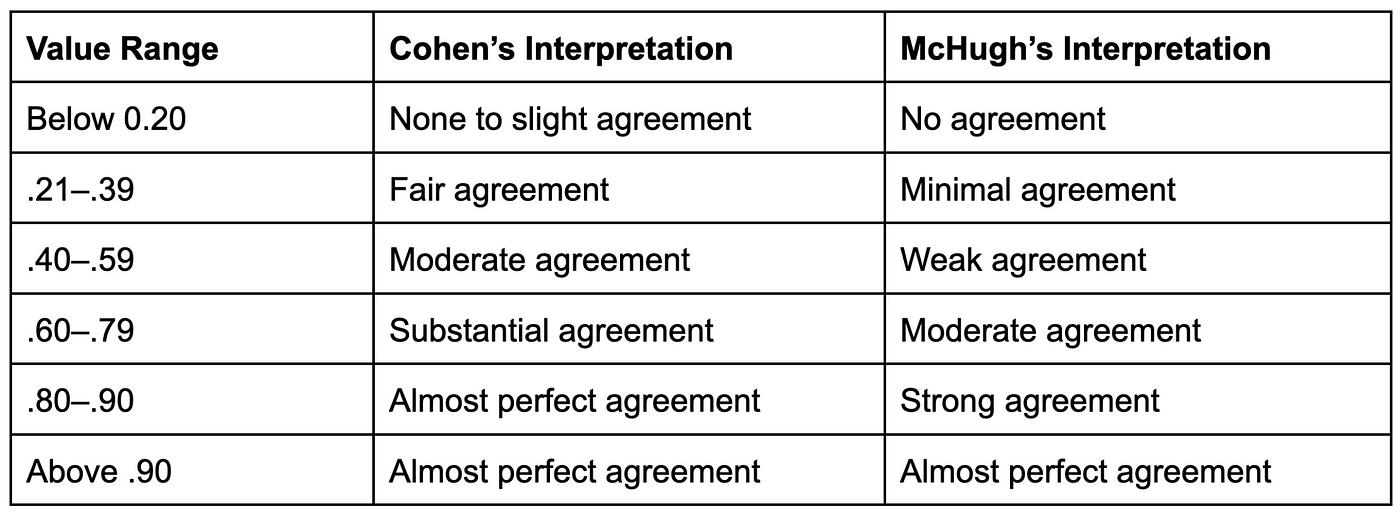

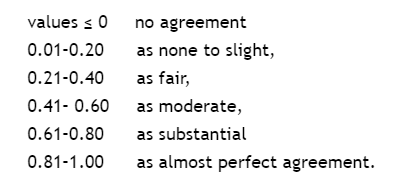

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

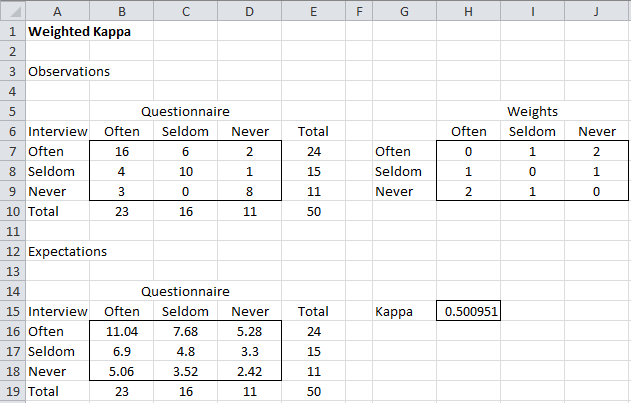

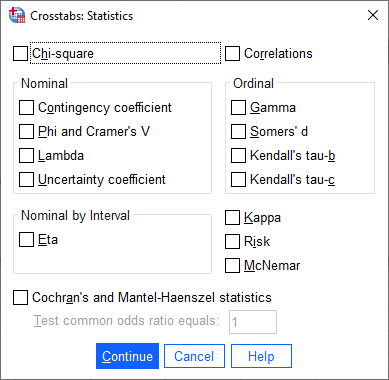

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics

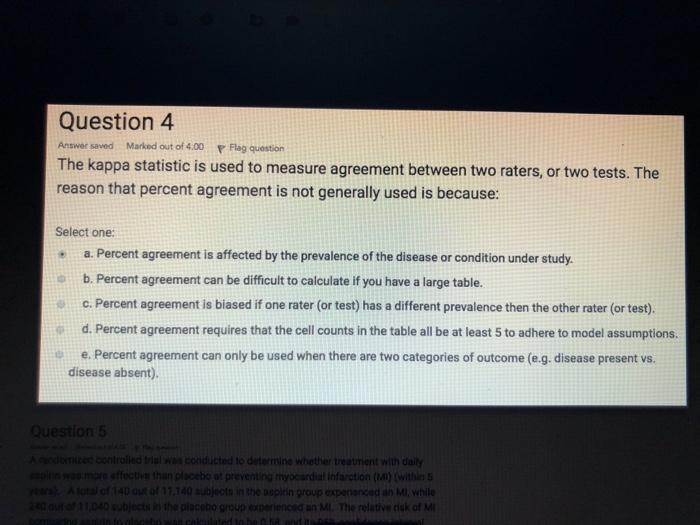

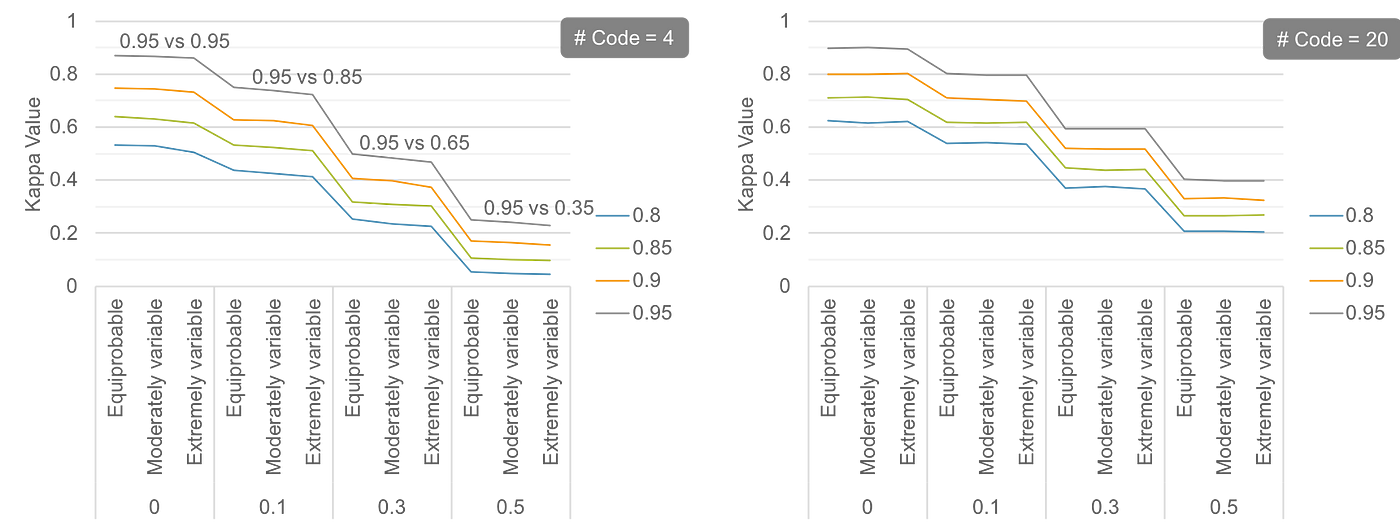

Percent Agreement, Pearson's Correlation, and Kappa as Measures of Inter-examiner Reliability | Semantic Scholar
